PiE MODEL: PUZZLE-SOLVING IN ETHICS FOR AI INNOVATION

WHO DO WE WORK WITH?

Each stakeholder in AI development and deployment has a responsibility to ensure that ethical risks are addressed in the right way, at the right time.

Our PiE Model for ethical puzzle-solving is designed to help CREATORS, LEADERS, and INVESTORS integrate ethics into their organizations. We work with organizations BUILDING or DEPLOYING AI technologies to ensure that (1) ethical risks are addressed proactively and (2) ethical opportunities are seized to make technology better for society.

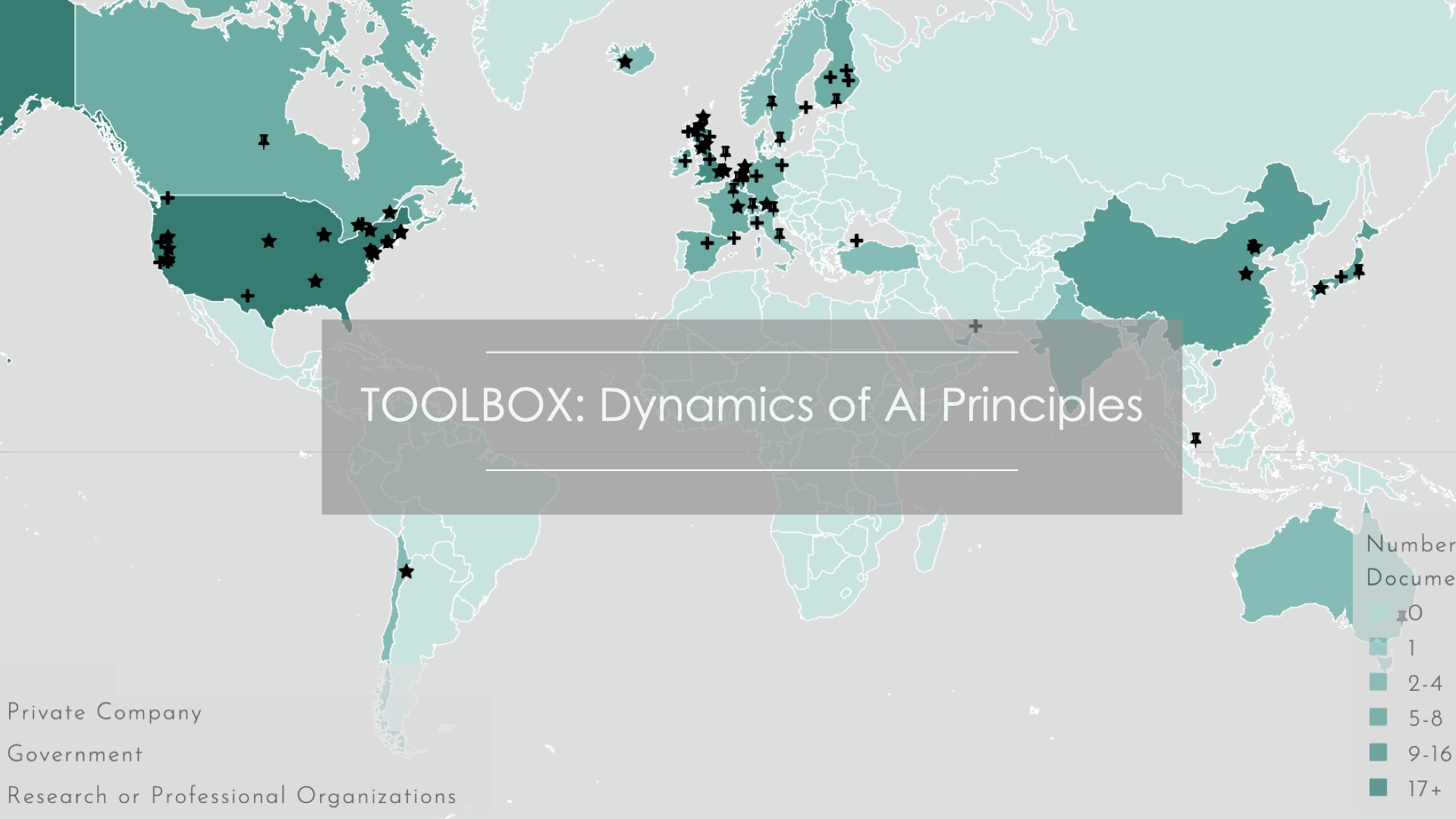

The Dynamics of AI Principles is our toolbox for keeping track of, systematizing, and operationalizing the bewildering and ever-growing number of published AI principles.

With this interactive toolbox, you can:

I. use the Map to sort, locate, and visualize AI principles by

   a. country and region,

   b. time of publication, and

   c. type of publishing organization;

II. search documents or browse the full list, with a summary of each document,

III. compare documents and their key points,

IV. visualize and compare the distribution of core principles, and

V. use the Box to systematize principles and evaluate technologies.