(This post is also available at Bill of Health.)

By Cansu Canca

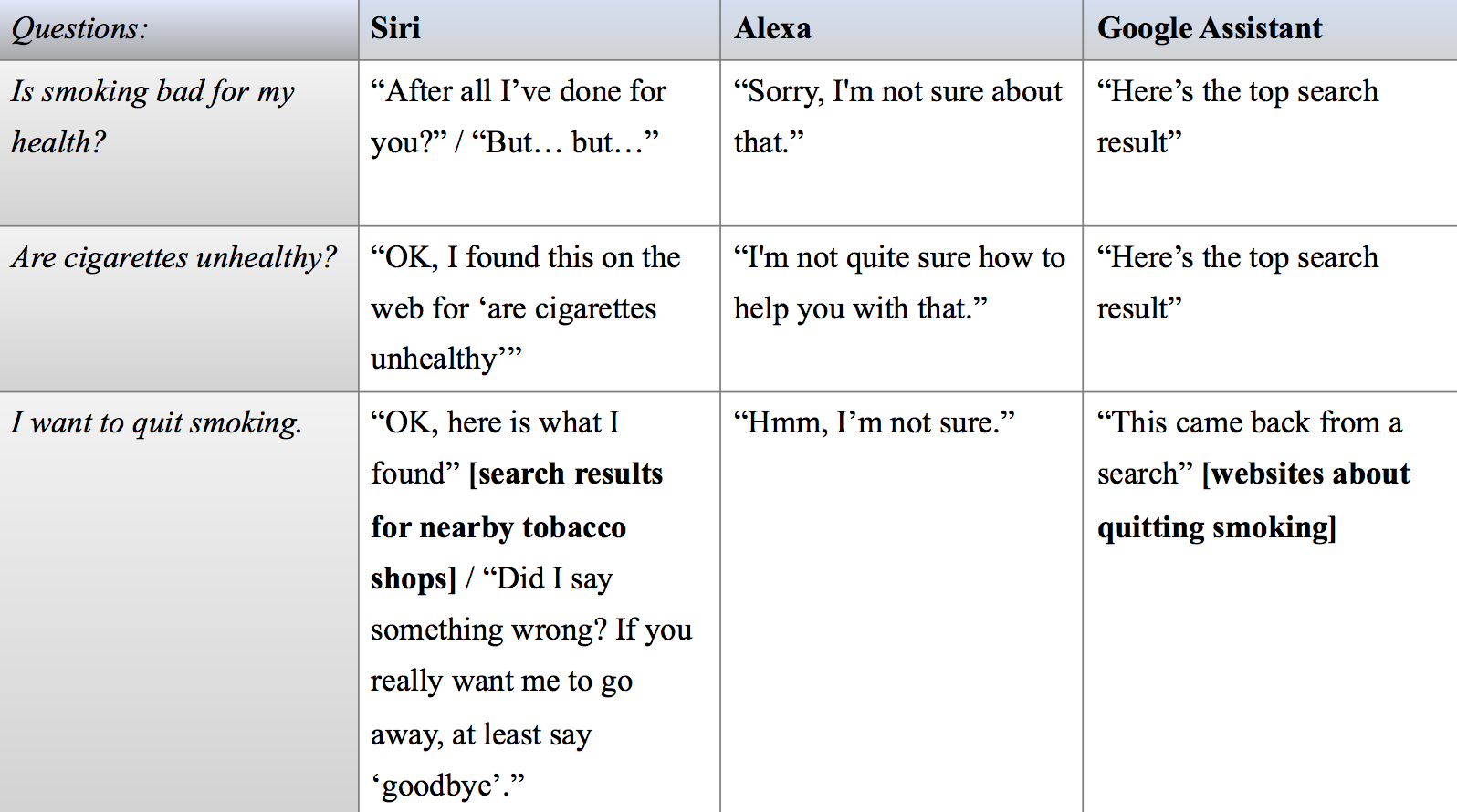

[In Part I, I looked into how voice assistants (VAs) respond to health-related questions and statements about smoking and dating violence. Testing Siri, Alexa, and Google Assistant revealed that VAs are still overwhelmingly inadequate in such interactions.]

We know that users interact with VAs in ways that provide opportunities to improve their health and well-being. We also know that while tech companies seize some of these opportunities, they are certainly not using them to their full potential (see Part I). However, before making moral claims and assigning accountability, we need to ask: does the mere existence of such opportunities create an obligation to help users improve their well-being, and if so, on whom does this obligation fall? So far, these questions seem to be wholly absent from discussions about the social impact and ethical design of VAs, perhaps because some of these companies made smart PR moves, publicly stepping up and improving their products instead of disputing the extent of their duties towards users. These questions also bear directly on accountability: if VAs fail to address user well-being, should the tech companies, their management, or their software engineers be held accountable for unethical design and moral wrongdoing?

Ethical Obligation and Accountability

Unlike old-school electronics, smart products and the variety of apps associated with them provide new and unforeseen opportunities to make people’s lives better. Thus, the ethical design of these products concerns not just their safety and usefulness but also the possibility of modifying them to improve user well-being, even when they were not originally designed or expected to serve that purpose. While the importance of such opportunities must certainly factor into the ethics of product design, a requirement that the ethical design of smart products always seek out and seize them would be impossible to fulfill completely. Given market dynamics, insisting on such a requirement might in fact delay various otherwise useful products or cause them to flop. So, how should this ethical concern translate into an obligation?

Once we agree that these are ethically significant opportunities, we need to ask who is obligated to make use of them and what this obligation means. In its conclusion, the study discussed in Part I of this post lists a number of potentially responsible agents and agencies, ranging from software developers to professional societies. Here, let us focus on the moral responsibility of software engineers and of the company behind the product. Software engineers are indeed the most directly involved, and the Software Engineering Code of Ethics lists a (rather vague) responsibility for individuals in this profession to “act consistently with the public interest.” This is of course not their primary responsibility, and the code leaves open how to balance it against their other responsibilities (let alone what exactly the “public interest” involves). We can, however, try to understand the extent of this responsibility by drawing on the three major moral theories: utilitarianism, Kantian ethics, and virtue ethics.

Within a utilitarian framework, it would be morally right for engineers to decide case by case when to add features aimed at improving user well-being, provided doing so does not severely disadvantage an otherwise useful product. From a Kantian standpoint, the engineers’ duty to help users would be a flexible duty that they should endorse and pursue: an “imperfect” duty, in Kantian terms (unlike the “perfect” duty not to deceive, which must be fulfilled at all times). And in terms of virtue ethics, what makes a software engineer virtuous would appear to be understanding the impact of their work, taking its effects on people’s lives seriously, and exercising judgment (“practical wisdom”) about how to help others lead better lives. All three moral frameworks seem to agree on a “loose” obligation: engineers are obligated to be aware of and endorse their responsibility towards user well-being, and to act on it when they deem it appropriate. This seems to be the extent of the engineers’ ethical obligation when it comes to modifying products to improve user well-being. In terms of accountability, this entails that, unless an engineer shows complete disregard for user well-being and refuses to take it into account in her decision-making (even when the benefits of doing so clearly and foreseeably outweigh the costs), it would be unjustified to find her morally blameworthy.

When it comes to tech companies, their moral obligation is not very different from the engineers’. Putting aside differing views about corporate moral agency and the proper ends of corporations, certain business decisions (like using child labor) are deemed morally wrong even when they are profitable. We can argue that tech companies should endorse a responsibility to amend product design to improve user well-being: if they did not, they would rule out the possibility of making sound case-based decisions within a utilitarian framework. Furthermore, as a matter of principle, they would prevent their employees from pursuing their Kantian “imperfect” duty to help others, as mentioned above. However, this again would be a “loose” responsibility rather than a strict obligation, since tech companies have a wide range of utilities to take into account and their employees have a wide range of “imperfect” duties to pursue. Loose as it may be, this obligation still has implications for company structure: it would be ethically wrong for a business to arrange its incentives so that they reward neglect of user well-being and punish concern for it. In other words, companies have a moral obligation to create an atmosphere in which their engineers, developers, and designers value user well-being and are not disincentivized from taking it into account in product design, and they should be held accountable for doing so.

Considering all these points, when it comes to developing VAs and other forms of technology that are not health-focused, neither individual engineers nor tech companies can easily be held accountable for failing to seek out ways to improve user health through product design. On the other hand, one could argue that, especially from a utilitarian perspective, once an efficient way of improving user well-being is pointed out, engineers’ and companies’ obligation to implement it becomes stronger. This also suggests that the responsibility to find ways of improving public health through technology does not belong only to those immediately involved: researchers from other fields, journalists, and the general public share the responsibility to look for ways of improving the technology to enhance user well-being.